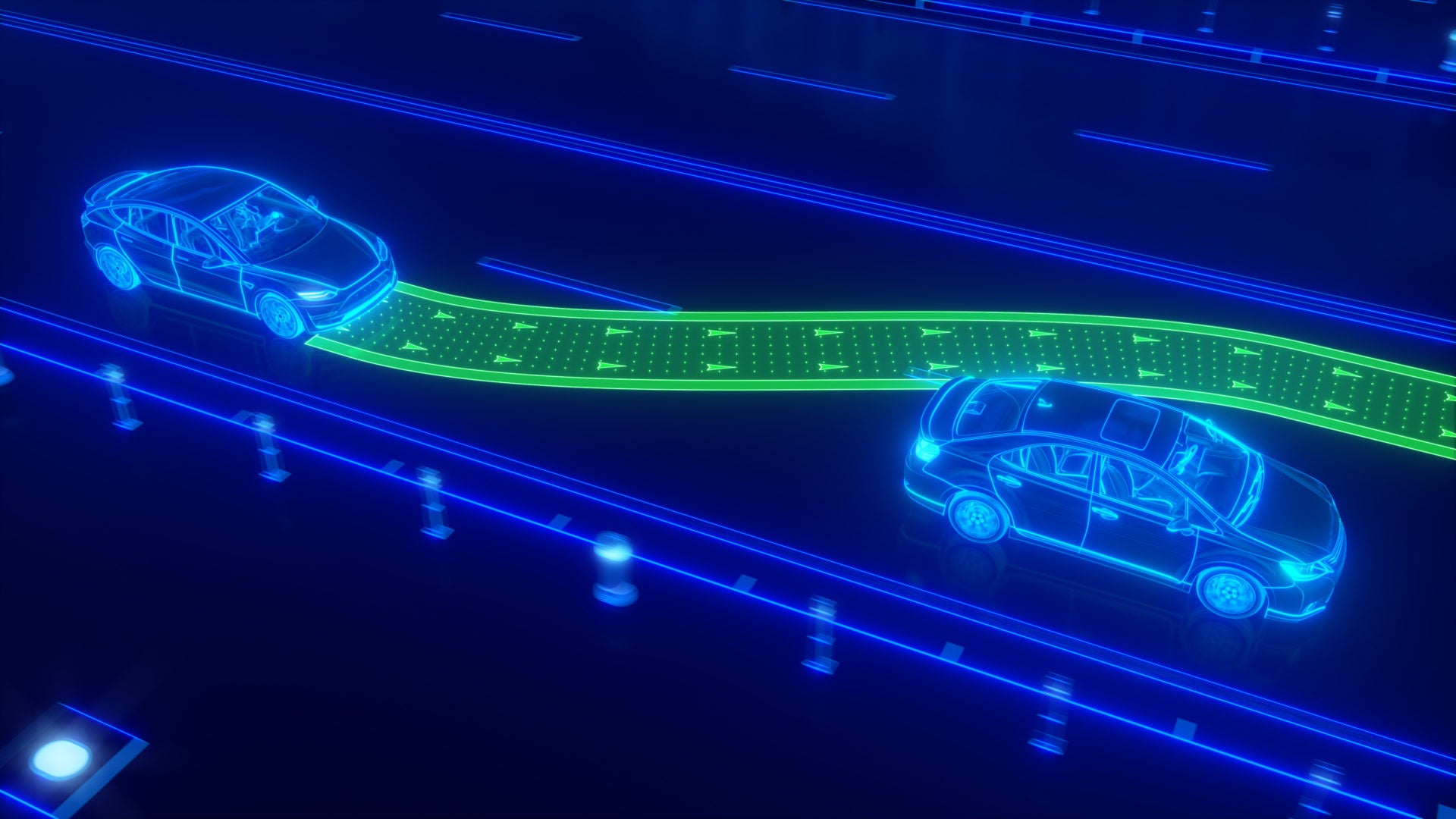

People are more likely to program an autonomous vehicle to behave cooperatively towards other cars than to behave cooperatively when driving themselves, a US study suggests.

The finding surprised the researchers, who, based on prior work, had expected the involvement of AI in cars to make people "more selfish".

The study involved 1,225 volunteers who took part in computerised experiments in which autonomous vehicles faced a social dilemma: a situation in which each individual benefits from making a selfish decision unless everyone in the group makes a selfish decision.

Based on the results, the researchers concluded that people are less selfish when programming autonomous vehicles because the short-term rewards of acting selfishly are less evident.

For example, a human driver who chooses not to let another car into the queue ahead of them in a traffic jam is instantly rewarded with a higher position in the queue.

By contrast, someone programming a vehicle in a setting removed from that situation, such as an office, is less likely to be swayed by the prospect of an instant reward.

Beyond programming autonomous vehicles

The study, a joint effort by the US Combat Capabilities Development Command's Army Research Laboratory (ARL), the Army's Institute for Creative Technologies and Northeastern University, was published in the Proceedings of the National Academy of Sciences.

The researchers said the results can be generalised beyond the domain of autonomous vehicles.

"Autonomous machines that act on people's behalf, such as robots, drones and autonomous vehicles, are quickly becoming a reality and are expected to play an increasingly important role in the battlefield of the future," said ARL's Dr Celso de Melo, who led the research.

“People are more likely to make unselfish decisions to favour collective interest when asked to program autonomous machines ahead of time versus making the decision in real-time on a moment-to-moment basis.”

However, it is unclear whether the same mentality applies when an individual's self-interest is weighed against a collective interest, said de Melo.

"For instance, should a reconnaissance drone prioritize intelligence gathering that is relevant to the squad's immediate needs or the platoon's overall mission?" asked de Melo. "Should a search-and-rescue robot prioritize local civilians or focus on mission-critical assets?"

The UK government has committed to having driverless cars on the road by 2021.
