As artificial intelligence and machine learning technology advance, so do anxieties that robots will take over the world.

To ease these concerns, a team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Boston University has created a feedback system that lets people correct robot mistakes using their brains. The project was partly funded by Boeing and the National Science Foundation.

CSAIL director, Daniela Rus, explained the inspiration behind the project, saying:

“Imagine being able to instantaneously tell a robot to do a certain action, without needing to type a command, push a button or even say a word. A streamlined approach like that would improve our abilities to supervise factory robots, driverless cars, and other technologies we haven’t even invented yet.”

The aim of the project is to make working with robots easier, so they can do whatever we are thinking.

Previous efforts have focused on natural language programming, teaching AI systems and robots to understand language so that their actions become more accurate. Rus’ team instead wanted to make the human-robot experience more natural by focusing on brain signals called “error-related potentials” (ErrPs), which are generated when humans notice a mistake.

How well do you really know your competitors?

Access the most comprehensive Company Profiles on the market, powered by GlobalData. Save hours of research. Gain competitive edge.

Thank you!

Your download email will arrive shortly

Not ready to buy yet? Download a free sample

We are confident about the unique quality of our Company Profiles. However, we want you to make the most beneficial decision for your business, so we offer a free sample that you can download by submitting the below form

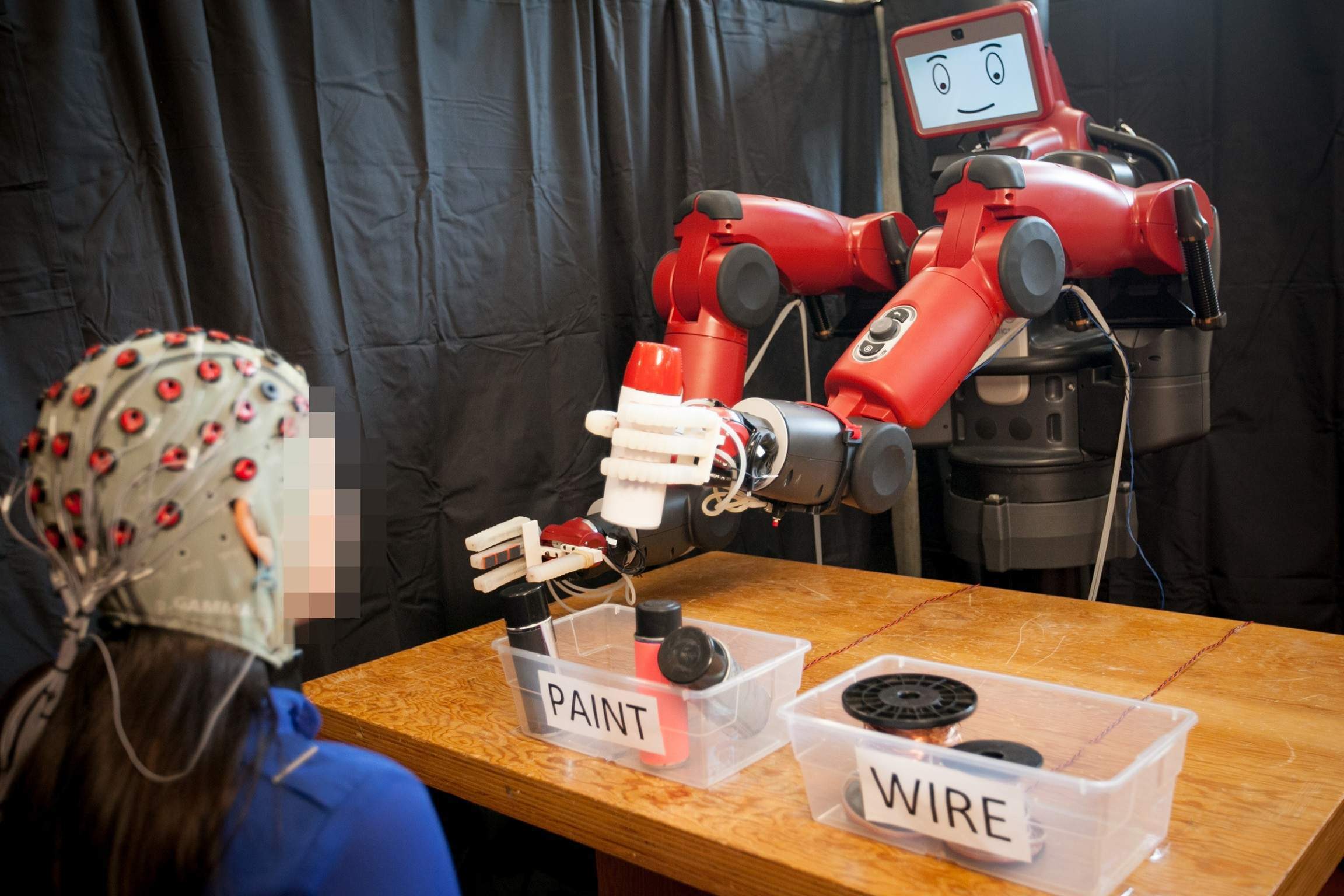

The team created a system using data from an electroencephalography (EEG) monitor that records brain activity. This system detects when the brain notices an error as a robot performs a task, using a machine-learning algorithm that can classify brain waves.

So, when our brains notice a mistake, they generate ErrP signals, which are picked up by the EEG monitor. The system classifies those signals and recognises that the observer disagrees with what the robot is doing. It then alerts the robot, which changes course.
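
For readers who think in code, the feedback loop can be sketched roughly as follows. This is only an illustration and not the team's implementation: the real system uses a trained machine-learning classifier on EEG recordings, whereas the hypothetical `detect_errp` function below stands in for it with a simple amplitude threshold. The names `BinaryChoiceRobot` and `supervise`, the threshold value and the sample readings are all invented for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence

# Assumed threshold in microvolts, chosen only for this illustration.
ERRP_THRESHOLD_UV = 2.0


def detect_errp(epoch: Sequence[float], threshold: float = ERRP_THRESHOLD_UV) -> bool:
    """Toy stand-in for the brain-wave classifier.

    Flags an error when the mean amplitude of a short window of EEG
    samples exceeds the threshold. A real classifier would be trained
    on recorded data rather than using a fixed cut-off.
    """
    return mean(epoch) > threshold


@dataclass
class BinaryChoiceRobot:
    """Hypothetical robot that can pick one of two options."""

    choice: str = "left"

    def switch(self) -> None:
        # Only binary decisions are supported, so a correction
        # simply flips to the other option.
        self.choice = "right" if self.choice == "left" else "left"


def supervise(robot: BinaryChoiceRobot, epoch: Sequence[float]) -> str:
    """Run one step of the loop: read brain data, correct the robot if needed."""
    if detect_errp(epoch):
        robot.switch()
    return robot.choice


if __name__ == "__main__":
    robot = BinaryChoiceRobot(choice="left")
    agree_epoch = [0.1, -0.3, 0.2, 0.0]     # made-up readings: observer agrees
    disagree_epoch = [1.5, 3.0, 4.2, 2.8]   # made-up readings: observer spots a mistake
    print(supervise(robot, agree_epoch))
    print(supervise(robot, disagree_epoch))
```

The sketch keeps the human side passive: the observer only watches, and the loop decides whether to flip the robot's choice, which mirrors the binary agree-or-disagree nature of the system described here.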

“As you watch the robot, all you have to do is mentally agree or disagree with what it is doing,” said Rus. “You don’t have to train yourself to think in a certain way — the machine adapts to you, and not the other way around.”

Though the technology is still in its first stages and can only recognise binary decisions, it could be improved with more research so that one day we could control, and work with, robots in more intuitive ways.

In addition, the technology could be developed for use by people who can’t communicate verbally, such as stroke victims.

Wolfram Burgard, a professor of computer science at the University of Freiburg who was not involved in the research, said:

“This work brings us closer to developing effective tools for brain-controlled robots and prostheses. Given how difficult it can be to translate human language into a meaningful signal for robots, work in this area could have a truly profound impact on the future of human-robot collaboration.”