Artificial intelligence (AI) is big business and its reach is expected to increase even further. Thousands of companies, from huge corporations to fledgling startups, are pursuing AI technology in some form, and the industry will only get bigger – the global AI market is estimated to reach up to $190bn by 2025, at a compound annual growth rate of more than 36.2%.

Media coverage (including in this publication) frequently focuses on AI as a future threat, from fears that a robot might steal your job to the modern trolley problem of self-driving cars.

But noise about the coming revolution distracts from the extent to which AI is already embedded in human lives, and how its role is designed and managed.

New research from RSA and YouGov shows that the British public has a limited understanding of how AI is currently used, and that they generally don’t trust the technology.

How aware are people of AI in their lives?

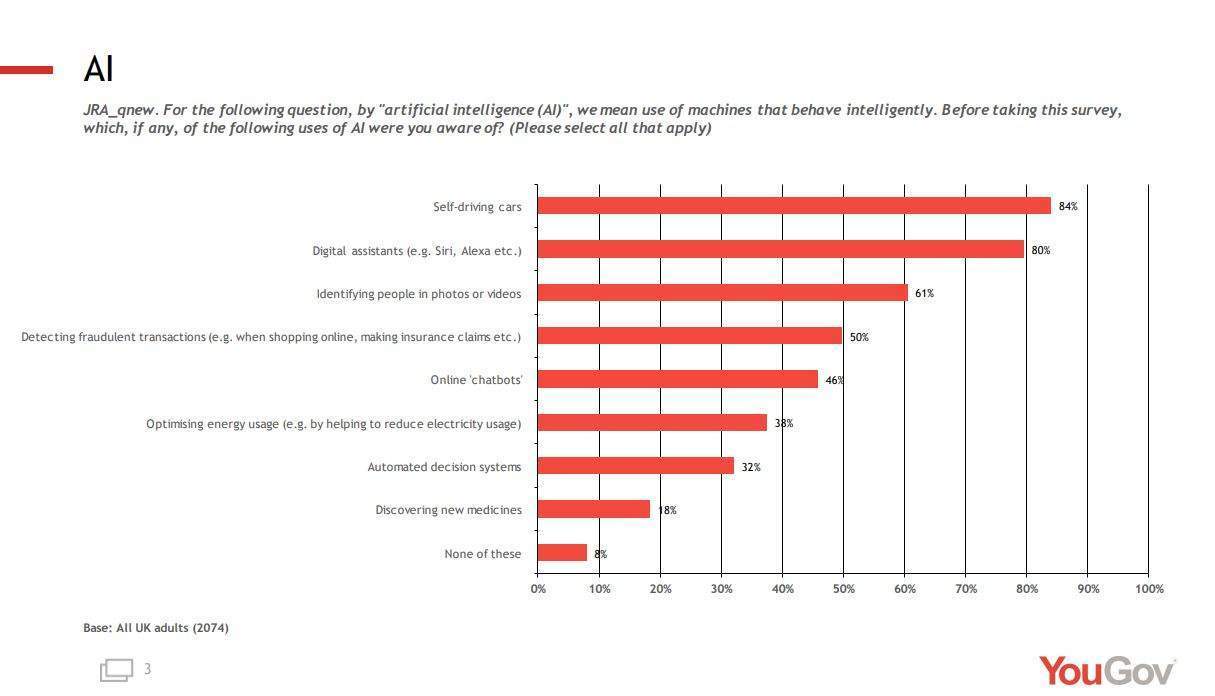

RSA, a charity committed to finding practical solutions to social problems, defines AI as “machines that can perform tasks generally thought to require intelligence”. The areas where people were most aware of AI being used are self-driving cars (84%) and digital assistants like Siri or Alexa (80%). Unsurprisingly, this indicates that media coverage (in the case of self-driving cars) or personally interacting with the system (in the case of digital assistants) strongly influences the public’s ability to spot AI at work.

Interestingly, however, only 46% of respondents were aware of AI being used in online “chatbots”. This is despite the fact that many of these respondents will likely have interacted with such bots, which many companies (potentially a majority in developed countries) now use for customer service lines, to make recommendations, or to streamline ordering.

Companies known to use chatbots include Starbucks, Staples, Whole Foods, Spotify, Sephora and Marriott International. Many of these bots use channels like Facebook Messenger, Slack or Kik. The low level of awareness of the AI behind them (compared to, for example, Siri) suggests that the type of interface used matters for public awareness.

How aware are they of automated decision-making systems in particular?

RSA’s research becomes all the more interesting as it digs into automated decision systems. These are computer systems that inform or decide on a specific course of action. A crucial caveat: not all automated decision systems use AI, but many draw on machine learning to improve the accuracy of their decisions or predictions.

Automated decision systems have been used in the private sector for many years, and are increasingly used by public bodies. However, RSA and YouGov’s study found that only 32% of people were aware of automated decision-making systems in general.

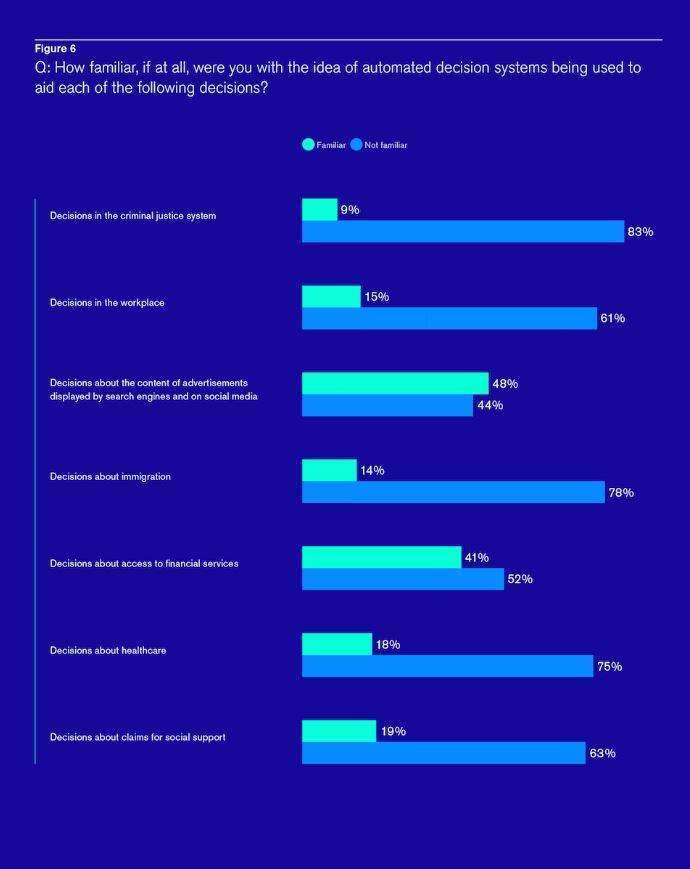

Awareness drops for the use of automated decision-making in public services such as healthcare (18%), immigration decisions (14%) and the criminal justice system (9%). Automated decisions in the workplace also ranked low (15%).

What about trust?

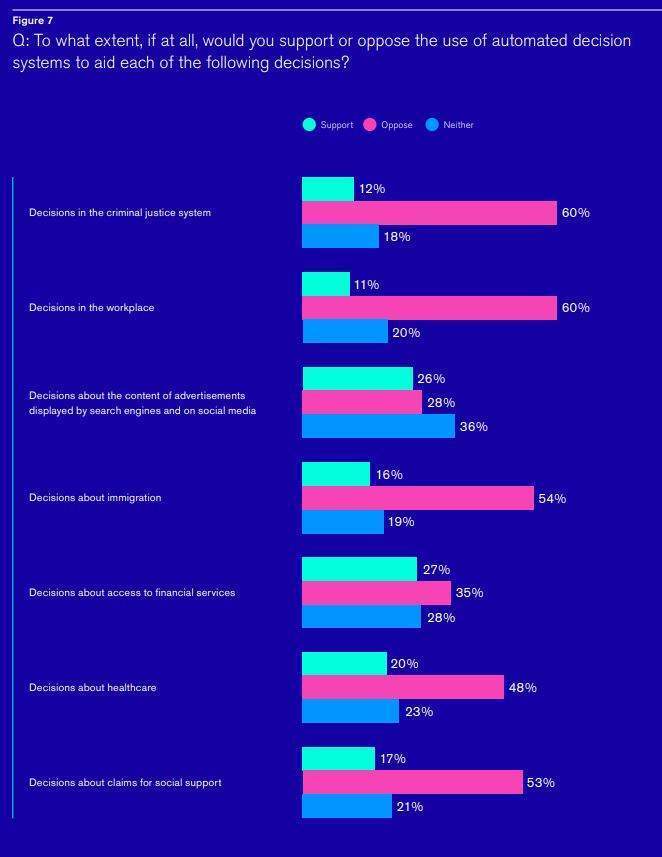

These low levels of awareness were paired with low levels of trust. Respondents supported the use of automated decision-making at a far lower rate than they opposed it, or didn’t know. More than half of respondents actively opposed such decision-making in all the posited situations, apart from healthcare and financial services (which were opposed by 48% and 35% respectively).

When asked about their distrust, 60% said that it came from fears that AI does not have the empathy required to make important decisions that affect individuals and communities. Significant proportions were also concerned that automated decision-making reduced people’s responsibility and accountability for the decisions they then implement (31%) and that there was a lack of adequate oversight or government regulation of automated decisions to protect people if an unfair decision is made (26%). Worries that automated decisions could reinforce biases that already exist in systems (e.g. racial bias in the criminal justice system) were an issue for 18%.

These findings raise certain questions. How much automated decision-making goes on in these areas? What ethical considerations are thrown up by a lack of awareness or by public distrust? What is needed to resolve these issues?

Automated decision systems in the criminal justice system

Taking the criminal justice system, the area of which the public was least aware, as an example, it is easy to see why most people don’t know whether AI is being used, or how. Different policing bodies use different mechanisms, which frequently aren’t announced or picked up by the media until they’ve been running for some time. However, it is known that several UK police forces use, or have used, automated systems to predict the areas where crimes might be committed and to inform decisions about keeping individuals in custody.

Durham police developed the Harm Assessment Risk Tool (HART) over the course of five years. HART uses AI to predict whether suspects are at a low, moderate, or high risk of committing further crimes in a two-year period. This then informs custody decisions. Data from 34 different categories, including age, gender, offence history and postcode, are used to predict future outcomes. The model was trained on 104,000 custody events from 2008-12.

Police in Durham started using HART in May 2017. The force reported that the system was 88% accurate in high-risk predictions, and 98% accurate in low-risk predictions. Big Brother Watch, a civil liberties and privacy watchdog, points out that, while these figures seem attractive, a 12% error rate in high-risk predictions still means a worryingly high number of suspects detained incorrectly.
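To make those percentages concrete, the arithmetic can be sketched as below. This is only an illustration: the cohort size of 1,000 predictions is a hypothetical figure chosen for easy reading, not a number reported by Durham police, and the function name `expected_errors` is our own. It also assumes that "accuracy" means the share of predictions in a category that turned out to be correct.

```python
def expected_errors(n_predictions: int, accuracy: float) -> int:
    """Return the expected number of wrong predictions at a given accuracy."""
    return round(n_predictions * (1 - accuracy))


# Hypothetical cohort sizes; only the accuracy rates come from the article.
high_risk_errors = expected_errors(1000, 0.88)
low_risk_errors = expected_errors(1000, 0.98)

print(f"Wrong high-risk predictions per 1,000: {high_risk_errors}")
print(f"Wrong low-risk predictions per 1,000: {low_risk_errors}")
```

Under these assumptions, roughly one in eight people flagged as high risk would have been flagged wrongly, which is the concern Big Brother Watch raises.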

HART was also criticised for its use of suspects’ postcodes, which might reinforce existing socio-demographic biases towards viewing those in poorer areas as more criminal and, in turn, amplify existing patterns of offending. Durham police are now changing this part of the HART algorithm.

South Wales Police, London’s Met and Leicestershire Police have all been trialling automated facial recognition technology in public places, claiming that it has the potential to crack down on certain types of crime. These initiatives made headlines earlier this month when a Freedom of Information request made to South Wales Police revealed that 92% of matches made by their facial recognition cameras were incorrect.

What does all this mean ethically?

The rate of inaccuracy in South Wales Police’s trials caused Elizabeth Denham, the UK’s Information Commissioner, to threaten legal action against police forces if they didn’t put adequate checks and balances in place when using new technology.

Denham said:

“For the use of facial recognition technology to be legal, the police forces must have clear evidence to demonstrate that the use of facial recognition technology in public spaces is effective in resolving the problem that it aims to address, and that no less intrusive technology or methods are available to address that problem.”

The anger felt by Denham and the public over news of automated decisions being used ineffectively in the justice system points to problems with how such systems are currently designed, implemented and overseen.

Instinctively, we feel that we should be made aware when important decisions are taken by a machine rather than by a human, and we feel especially uneasy about automated decisions that have the potential to affect the life of an individual in a significant way (e.g. policing or social services decisions).

It seems likely that this unease comes from a feeling that decisions are being made in a way that we cannot wholly understand. Additionally, it can be hard to conceptualise algorithms or machines upholding more abstract value-based ideas like fairness or protecting the vulnerable. Almost all of the automated decision systems currently in place have been designed by small groups, making it hard to tell whether or not they reflect the values of society as a whole.

So, what should be done?

The answer to these problems, the RSA argues, is not fewer automated decision systems, but more open dialogue about them. As AI technology develops and is increasingly used in public life, it should be discussed and evaluated by citizens, to ensure that decisions on behalf of the many are not effectively being made by only a few.

To achieve suitable levels of public discourse, companies and public bodies must be transparent about the AI that they use, and should engage with citizens in its development. This will allow citizens to collectively resolve concerns, such as appropriate trade-offs between privacy and security.

The benefits of AI and automated decision systems should not be overlooked. They represent a chance for genuine innovation and for many societal problems to be dealt with effectively. But in order for those goals to be reached, ordinary citizens must be aware of the systems and able to trust them.