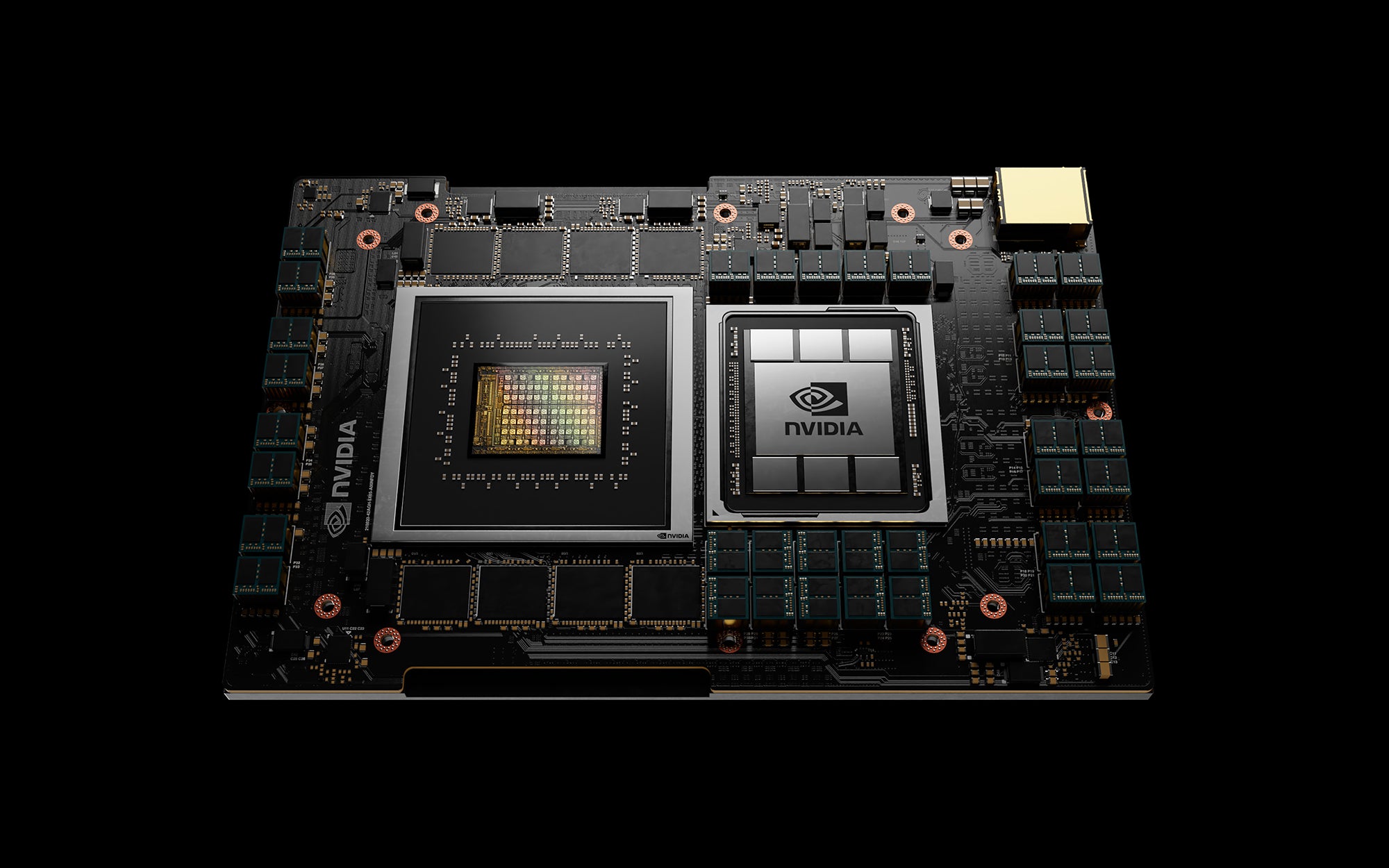

Graphics processing giant Nvidia has used its annual conference to announce a new Arm-based processor named “Grace”, intended for use in massive AI neural networks and supercomputing. The first Grace chips will ship in 2023.

Livestreaming his keynote speech from his kitchen, Nvidia CEO Jensen Huang said that the new processor is named in honour of US Navy Rear Admiral Grace Hopper, a major figure in the early days of computing who is usually credited with the idea of machine-independent programming languages and with much of the work underpinning COBOL. Admiral Hopper, who died in 1992, has already had a US warship, a supercomputer and a college at Yale University named after her.

Nvidia describes Grace as “designed from the ground up for accelerated computing”. It features new NVLink networking, designed to give it the bandwidth to communicate with graphics processing units (GPUs) in the same system, along with special high-bandwidth LPDDR5x memory. The idea is that these additions to the new Arm Neoverse processor cores of the Grace CPU will avoid bottlenecks and let CPUs and GPUs work together smoothly in high-performance computing and AI tasks.

Nvidia was pleased to announce that both the Los Alamos National Laboratory in the US and the Swiss National Supercomputing Centre have plans to build systems using Grace processors. The Los Alamos lab, famously the birthplace of the atomic bomb, nowadays carries out research in many other fields. It remains involved in the management of the US nuclear arsenal, however, a task which requires massive amounts of computing power in the post-nuclear-testing era.

Nvidia sees Grace-powered systems as also being hugely useful in building and training the increasingly deep and complex neural networks of tomorrow, the technology behind the current craze for machine-learning AI. Chip makers in general hope that AI will keep their industry on the pleasing growth trajectory it has now enjoyed for decades, and this is even truer of GPU specialists like Nvidia – graphics processors being especially useful in handling AI tasks.

There are more problems in making AI work for the real world than simply providing enough computing power, but Huang and Nvidia are clearly determined that if a new “AI winter” does come (there were AI winters in the late 1970s and the early 1990s) it won’t be for a lack of the right silicon to deepen the neural nets.

Nvidia also announced other bets on a continued AI summer at GTC, revealing that a new Nvidia Drive Atlan system-on-chip (SoC) will appear from 2025, superseding the Orin SoC planned for 2022. Both are intended for use in self-driving cars, and as such will have to be capable of various AI tasks such as computer vision. So far an inability to recognise things such as red traffic lights has been a problem for autonomous vehicles, but in the absence of any new infrastructure it is a problem that will have to be solved.

Apart from the new car SoCs, Huang and Nvidia also announced a new BlueField-3 data processing unit and DGX SuperPODs (systems deploying large numbers of the company’s A100 GPUs), purchasable for $60m.