Neuromorphic computing may be the next key development following on from generative AI, it was suggested on a recent GlobalData webinar.

The webinar, “What are the game-changing innovations in AI after Generative AI?”, explored the disruptive AI innovations of the future, their potential impacts and the companies at the forefront of them.

Generative AI

Generative AI is one of a number of recent AI breakthroughs, with tools like ChatGPT making these technologies available to a mass audience. Such platforms allow users to enter prompts in plain language and can answer questions, write computer code and even whole articles in response.

ChatGPT reached 100 million users in two months, faster than TikTok, Netflix or Spotify, and boasts an average use time of 8-10 minutes, similar to that of Facebook or YouTube. The appeal of generative AI goes far beyond general public use cases, though.

NASA is utilising the technology to build spaceship parts, and NVIDIA is pioneering its use in drug research and development. It is, however, limited in scalability, as current AI tools take large amounts of computing power to operate. This means that the servers processing requests are often off-site due to the space requirements, leading to latency in responses. It also leads to extremely high energy usage, which is both expensive and environmentally harmful.

So, what’s next?

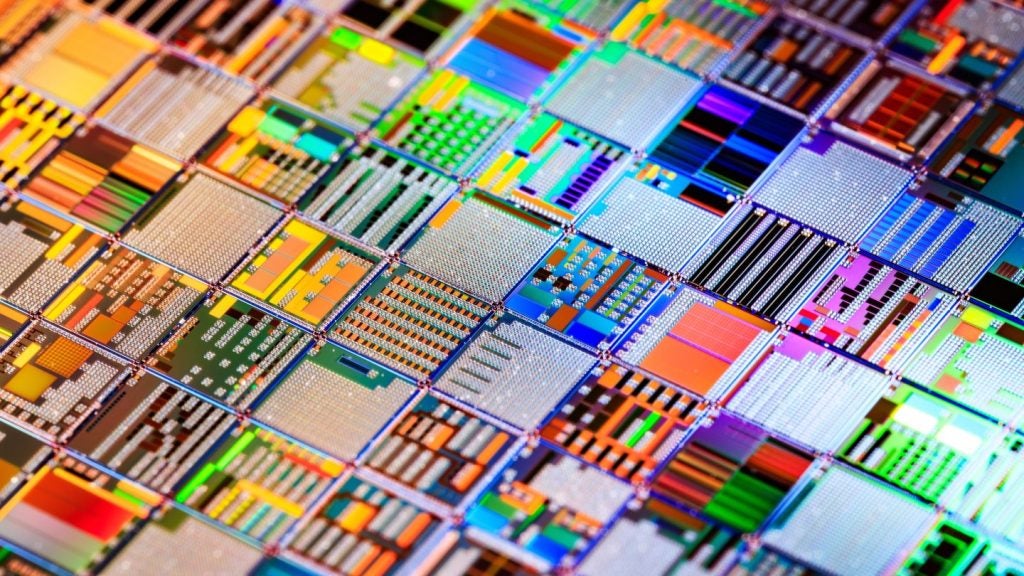

One of the solutions to these problems that has been gaining traction recently is a move towards neuromorphic computing. This is a way of building computers modelled on the human brain. Though perhaps philosophically reductive, the brain can be abstracted on a technical basis into a collection of computation units (neurons) connected by fast-access local memory (synapses).

Building a computer system in a similar way can increase the density of computing power, meaning much lower energy costs and a significantly smaller physical footprint. The human brain draws around 12W of power on a continuous basis, several times lower than even a laptop computer’s 60W. It is also able to respond to stimuli in real time, growing and rearranging connections between neurons as it is exposed to new information.

Whilst the technology is currently far from matching the 100 billion neurons found in the human brain, Intel has developed a neuromorphic computing board with 100 million neuron-equivalent nodes. All of this power fits inside a chassis the size of five standard servers.
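To put those figures side by side, the short Python sketch below works through the arithmetic. It uses only the approximate numbers quoted above; the variable names are illustrative, and the results should be read as rough orders of magnitude, not measurements.

```python
# Approximate figures as quoted in the article (not measured values).
BRAIN_NEURONS = 100_000_000_000  # ~100 billion neurons in the human brain
BOARD_NEURONS = 100_000_000      # Intel board: 100 million neuron-equivalent nodes
BRAIN_POWER_W = 12               # continuous power draw of the brain, in watts
LAPTOP_POWER_W = 60              # power draw of a laptop computer, in watts

# How many times more neurons the brain has than the board has nodes.
neuron_gap = BRAIN_NEURONS / BOARD_NEURONS

# How many times more power a laptop draws than the brain.
power_gap = LAPTOP_POWER_W / BRAIN_POWER_W

print(f"Brain neurons per board node: {neuron_gap:,.0f}x")
print(f"Laptop power relative to the brain: {power_gap:.0f}x")
```

On these numbers, the brain has roughly a thousand times as many neurons as the board has nodes, while a laptop draws about five times as much power as the brain.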

What can neuromorphic computing do?

This kind of computing will not necessarily be useful for the same applications as generative AI, at least in the beginning. GlobalData predicts that the primary functions of neuromorphic computing will be in more human-like tasks: improving the connectivity between prosthetics and human brains, improving autonomous vehicles’ driving and improving customer service.

In the long term, this technology will likely see greater integration with generative AI and neural networks, as has already begun with IBM’s TrueNorth chip. TrueNorth features 1 million digital neurons connected by 256 million digital synapses, with the capacity for neural network integration to allow AI models to learn more rapidly and with greater power efficiency.

Job postings for roles in neuromorphic computing have accelerated since mid-June 2021, and 2022 saw an increase in senior postings in the field compared to previous years. Unsurprisingly, Intel and IBM are two of the largest hirers (first and third respectively), alongside Ericsson (second) and HP (fourth).

Whether the technology focuses on generative AI integration or charts its own course, there is no doubt that neuromorphic computing will be a key factor in shaping the future of technology.