US cybersecurity company Mandiant has warned that although AI is currently little used in malicious online activity, threat actors remain highly interested in leveraging the technology.

Having tracked activity since 2019, Mandiant claims that the lowest usage of AI by threat actors was in social engineering cases.

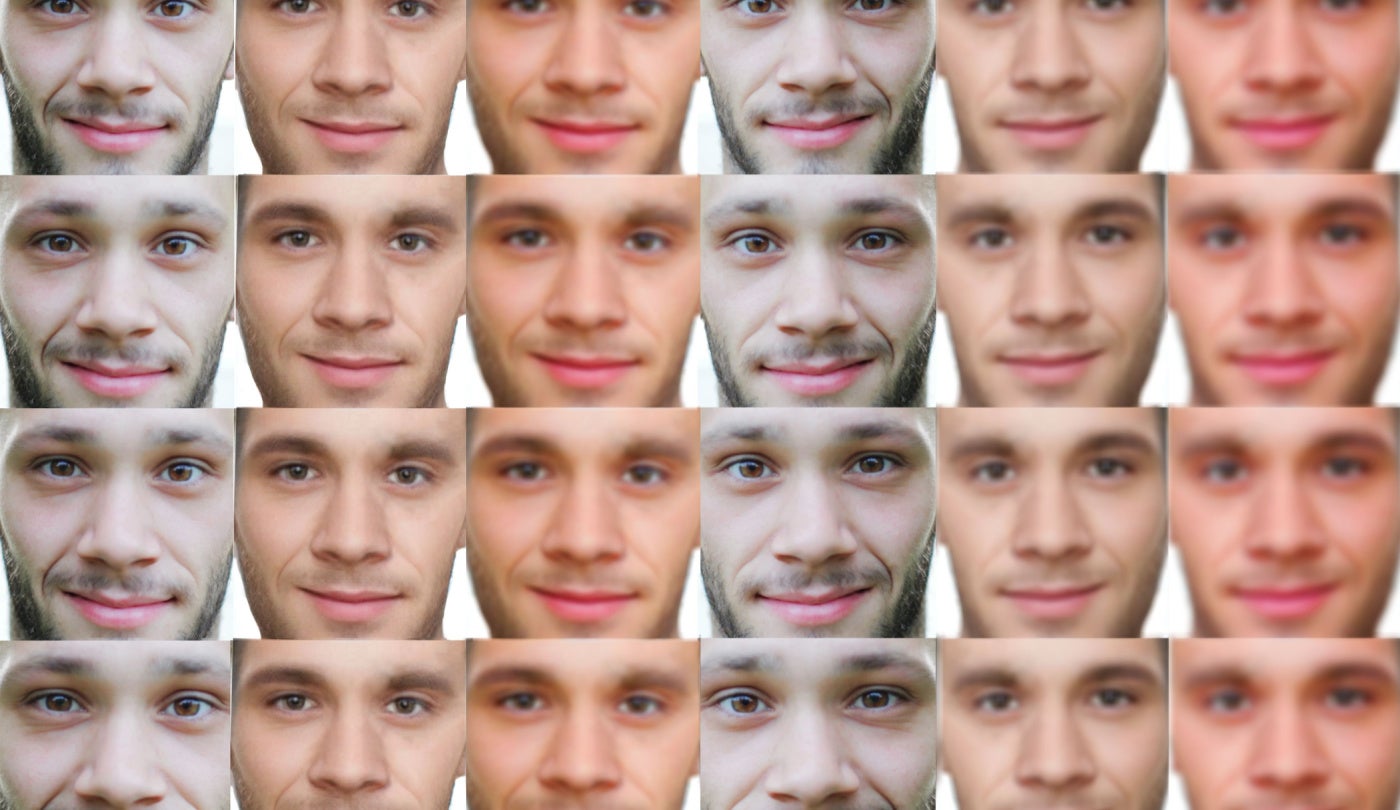

The highest usage of AI in cyberattacks was the use of AI-generated imagery and video to spread disinformation.

The release of multiple popular generative AI tools within the last year has led Mandiant to estimate that generated content will accelerate threat actors’ interest in the technology.

Mandiant believes that generative AI will drastically increase the scale at which threat actors can attack information systems, as well as provide bad actors with realistic generated photos and videos towards deceptive ends.

The company also notes that the ubiquity of generative AI will allow threat actors with limited resources and skills to create higher-quality fabricated content. Hyper-realistic generated content, says the firm, may have a stronger persuasive effect even on targets who would previously not have fallen for such attacks.

Whilst such cases are rare, Mandiant already points to several instances where deepfake technology has been used to spread misinformation.

In March 2022, shortly after the Russian invasion of Ukraine, an AI-generated video of Ukrainian President Volodymyr Zelenskyy circulated online.

To the average internet user unaware of the full capabilities of AI, the video appears to show President Zelenskyy surrendering to Russia.

Whilst previous analysis of fraud shows that the elderly population was at the highest risk of online fraud and cyberattacks, increasingly realistic AI-generated images and videos put even the savviest of internet users at risk.

GlobalData estimates that worldwide cybercrime will reach $10.5trn by 2025.

As AI enables more realistic scams via deepfake content or vishing (voice phishing), the demographic at risk of online attacks could become wider than ever before.

MIT’s Media Lab has already begun work on a project to help internet users recognise AI-generated content.

The lab hypothesised that the more audiences are exposed to deepfake video content, the easier it will become for them to recognise it in the future.

To test this, the lab has set up a website called Detect Fakes to display thousands of high-quality AI-generated videos. The website asks users to guess whether a displayed video is real or generated, and to rate their confidence in their decision.

For future reference, the lab advises users to look at a person’s face during a video when determining if it is real or not.

However, Mandiant’s warning could mean that future internet users will have to adapt by constantly questioning where content comes from and whether it is authentic.

Whilst there has already been an online shift in response to fake news, users will now also question whether the person on their screen is human.