In a bid to become the powerhouse behind the next generation of AI infrastructure, AMD has announced its latest accelerator and networking solutions.

Launching the products alongside the fifth generation of EPYC processors and the third generation of Ryzen processors, AMD has revealed the Instinct MI325X accelerators and two Pensando networking engines: the AMD Pensando Salina DPU (for the front end) and the Pensando Pollara 400 NIC (for the back end).

Speaking at the company’s ‘Advancing AI’ event last week, CEO Lisa Su highlighted the importance of network infrastructure in the company’s growth plan, as it looks to create optimised AI solutions at the system, rack and data centre levels.

She told attendees that “putting together all of these world-class components designed to complement each other makes for great solutions.”

Her ambitions were echoed by Forrest Norrod, executive vice president and general manager of AMD’s Data Center Solutions Business Group, who said that “AMD underscores the critical expertise to build and deploy world class AI solutions.”

The Instinct MI325X, AMD’s newest accelerator series, was launched with the promise of the AMD Instinct MI350 accelerators to come in the second half of 2025. The Instinct MI325X accelerators are built on the CDNA 3 architecture, and AMD says they will offer 1.8 times the memory capacity and 1.3 times the memory bandwidth of Nvidia’s H200, as well as 1.3 times the peak theoretical FP16 and FP8 compute.

Shipments of the accelerators are expected to begin in Q4 2024, and the accelerators will be available from a broad set of platform providers from Q1 2025. These will include Dell Technologies, Eviden, Gigabyte, Hewlett Packard Enterprise, Lenovo and Supermicro.

Talking about the future, Su said: “We’re looking forward to the continued optimisation effort. It’s not just for the 300X, but also for the new 325X and upcoming MI350 series. We’re excited about the compute memory uplifts we’re seeing with these products.”

In accordance with AMD’s annual roadmap, the MI350 series will be unveiled next year. Its improvements will include increased memory capacity, with 288GB of HBM3E memory per accelerator.

The Instinct MI350 accelerator series will be based on the CDNA 4 architecture, and AMD expects the GPUs to offer a 35-fold improvement in inference performance compared to CDNA 3 chips.

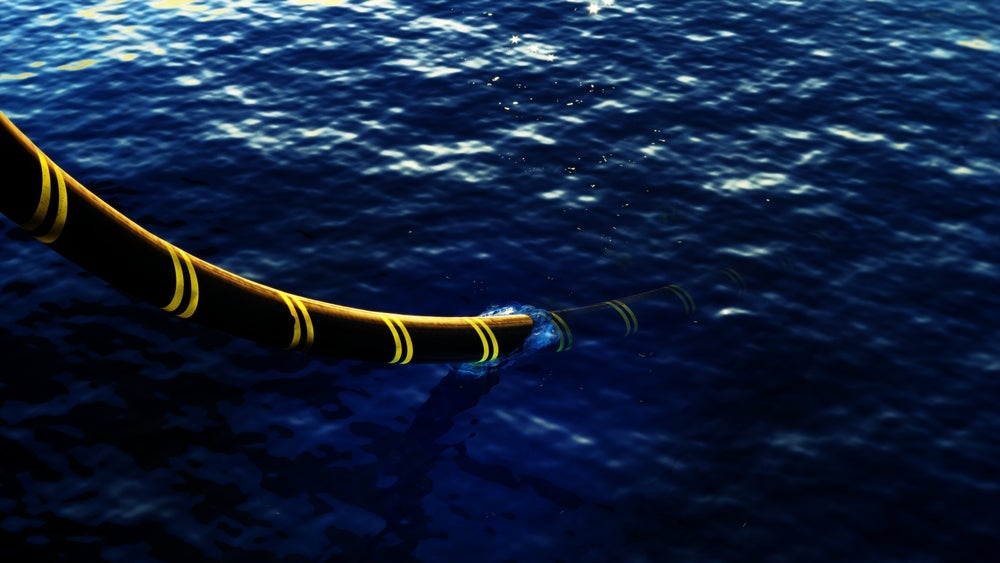

Alongside the Instinct MI325X accelerators, AMD also revealed its networking solution: the Pensando platform for hyperscalers, which is split into two parts. On the front end is the Pensando Salina DPU; on the back end is the Pensando Pollara 400, billed as the industry’s first Ultra Ethernet Consortium (UEC) ready AI NIC.

The Pensando Salina DPU is the third generation of AMD’s programmable DPU and handles the delivery of data to an AI cluster. Compared with the previous generation, it promises twice the performance, bandwidth and scale.

The Pensando Salina DPU can support 400G throughput, offering faster data transfer rates. It will enable optimised performance and efficiency, as well as scalability for data-driven AI applications.

Meanwhile, the Pensando Pollara 400 is designed to provide performance, scalability and efficiency in accelerator-to-accelerator communication. Powered by the AMD P4 Programmable engine, it is the first UEC-ready AI NIC and supports next-generation RDMA software.

The AMD Pensando Salina DPU and AMD Pensando Pollara 400 are sampling with customers in Q4 2024 and are expected to be available for purchase in the first half of 2025.