Chatbots are everywhere. From managing your money to travelling around London on the tube, or even chatting to Paul McCartney, they are bringing new benefits and cutting call centre costs across industries. Now chatbots are entering the world of mental health, helping those suffering from depression, anxiety and other conditions.

With their ease of access and low-cost help, they appear to be a sure-fire assistant to those in need, but can AI really make a difference?

The rise of mental health chatbots

While human psychologists train for years to practise their trade, chatbots need only a sufficiently advanced algorithm and a little help from machine learning. These apps are grounded in cognitive behavioural therapy (CBT), a form of talking therapy.

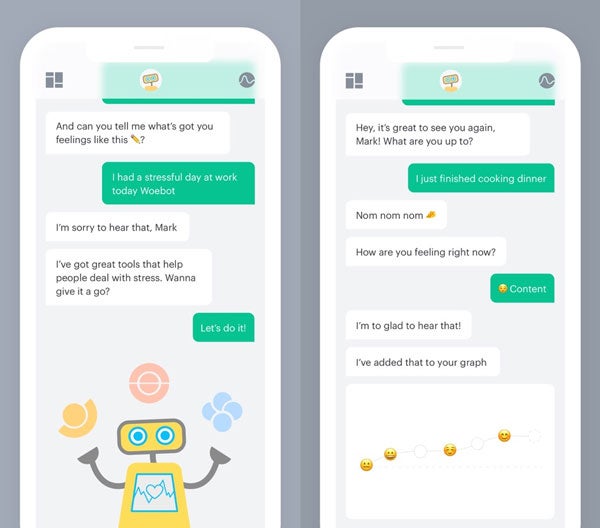

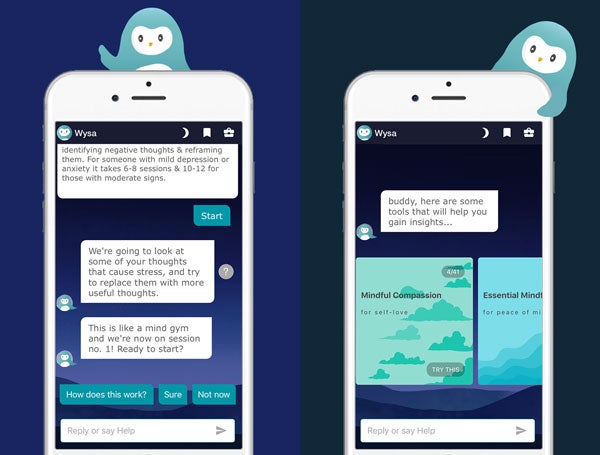

Two of the leading apps in this field are Woebot, which offers “quick conversations to feel better”, and Wysa, “for when you need to get your head straight”.

Mental health charity Mind’s head of information, Stephen Buckley, tells Verdict: “While chatbots can be beneficial for some people, it’s important to recognise that they aren’t a replacement for therapy delivered by a qualified practitioner – it may be that chatbots are used alongside a range of other treatment options as an additional means of support.”

A testimonial on the Wysa site praises the platform’s support: “I am often too anxious to talk to or contact anyone so having a bot that I can vent to and can offer real, constructive advice without feeling like I’m being judged or taking anyone’s time is really, really helpful”. The company’s press pack says it has helped 1,200,000 users through tough situations.

Wysa began as a project using machine learning to detect depression and grew from there, with the company praising its AI solution as a way for people to talk about things they may not be comfortable telling another person.

Wysa’s co-founder Ramakant Vempati explains the inner workings of the chatbot to Verdict:

“It uses AI to ‘listen’ to users – we have more than 100 NLP models that have been built upon 80 million conversations to detect and understand user input.

“Wysa then responds with an appropriate conversation, using self-help techniques like CBT [cognitive behavioural therapy] that have been validated and supported by research.”

Woebot works in a similar manner, with its team of creators having 20 years’ combined experience in behavioural science. Its site features a testimonial from life hacker Nick Douglas, who said: “In my first session with Woebot, I found it immediately helpful… addressing my anxiety without another human’s help felt freeing.”

Can a chatbot help combat depression and anxiety?

Dr Lesa S Wright, Consultant Psychiatrist and Royal College of Psychiatrists representative to the Medicines and Healthcare products Regulatory Agency (MHRA), tells Verdict:

“There is some evidence to suggest that AI chatbots can help people suffering from depression and anxiety. This relates in part to accessibility and facilitation of disclosure and to [a] reduction in symptoms.

“So, somebody doesn’t have to wait to be seen by a professional and they don’t have to worry about being judged by another human being.”

The chatbots’ creators say that do-it-yourself cognitive behavioural therapy can, in some circumstances, remove the need to see a therapist.

“Different people will find different treatments work for their mental health, whether this is medication, talking therapies, or alternatives such as arts therapy or a combination of these,” adds Buckley.

This sentiment is echoed by Dr Wright.

“At the less severe end of the spectrum, a DIY approach can impart enough knowledge and skills that a person would never need a professional,” he says.

“At the more severe end, the role of a professional begins with formulating what the predisposing, precipitating, perpetuating and protective factors are and going on to work out what a suitable therapy is.”

Mental health charity Mind lists Woebot and Wysa as tools that can help users suffering from anxiety and depression, and an NHS Trust has previously recommended Wysa for teenagers with these conditions.

Tabitha, a volunteer crisis counsellor, says she also recommends the apps to the people she supports, and told Verdict that she felt compelled to try the service herself:

“I’ve been using Woebot to find out what I was recommending to people. I’ve been using it daily for a month. Although I don’t necessarily need therapeutic support at the moment, I do find it distracts me and cheers me up a bit”.

Vempati shares the ethos behind the chatbot with Verdict:

“Our approach has actually been to offer Wysa as a self-help tool, to build emotional resilience, rather than as a ‘cure’ for mental illness.

“This distinction is important, as there are so many misconceptions (and apprehension) about the role AI can play in this space”.

When a chatbot is the right choice for mental health treatment

When it comes to chatbots and apps, Wright argues that clear distinctions need to be made about what and whom they serve to ensure they are effective, citing a study into the use of artificial intelligence to help people suffering from depression and anxiety.

“A distinction needs to be made between severe mental disorders and mental well-being problems. In the study, participants tended to have mild-moderate symptoms depending on the rating scale used,” he says.

“Well-being problems are probably a better target for chatbots – anxiety and depression symptoms are very much the ‘common cold’ of mental well-being problems, which lend themselves quite well to self-help.”

The apps can feel as though they are working through a checklist to build their advice, an approach that landed Woebot in hot water when, in 2017, a BBC investigation showed it failed to spot child sexual exploitation, instead saying it was great that the user “cared about their relationship”.

However, after a few more years of development, the algorithms appear to have become more sophisticated.

As Buckley explains: “As with all treatment choices, there are likely to be pros and cons associated with using a chatbot or other means of online help – thinking about what you want to get out of your treatment will help you make the right decision about what’s best for you.”

Tackling the male mental health crisis

In 2017, the UK saw 6,213 suicides, with middle-aged men at the highest risk of taking their own life.

For a generation of men who feel they cannot talk to people about their depression or anxieties, mental health chatbots, with the appeal of a conversation that involves no other human, present an interesting solution.

Wright touches on how chatbots may help alleviate this, pending further study.

“Apparently, men are three times more likely to talk with technology about their intimate, personal issues than humans. I have not been able to trace the original reference to this claim,” he says.

“If this is the case, then there is some obligation to find ways of making safe technology accessible.”

Vempati explains how further study on Wysa and the uses of mental health chatbots is in progress:

“It is still early days in terms of clinical research – we have published one paper on efficacy and several more are in the pipeline – but it is important that providers are held to this high standard by users, practitioners, and the broader community.”

The death of a young man close to Jo Aggarwal, co-founder of Wysa, inspired her to build the chatbot. In a TEDx Talk, she describes how artificial intelligence can take on emotional intelligence, the foundation on which Wysa is built.

The project originally pulled data from the sensors on users’ phones and used AI to see whether depression could be detected. The AI was 90% accurate at this, but identifying depression does not mean that everyone affected will get treatment.

“I’m sorry to hear that” is a common response from Woebot when users tell the app their woes, and one received by many of the mental health chatbot industry’s six million users. It is usually followed by a slightly more in-depth question aimed at finding out how you are feeling.

Formula vs feelings

The formulaic approach of mental health chatbots, touched on by Dr Wright, can itself become a source of annoyance, leading some users to tell the app they feel better simply to end the conversation. For others, however, it offers a valuable outlet for quickly expressing their feelings without setting them back hundreds of pounds at a time.

“There is certainly potential for apps to bridge this gap. However, to my knowledge, there is no evidence yet that, even though there are many mental health apps, their use has reduced disparity in services,” says Wright.

“They are considered cost-effective and scalable. Due to safety concerns though, human professionals need to be able to confidently recommend apps.”

Ease of access is one area in which digital platforms can make a difference when it comes to helping people reach mental health services.

“Digital innovations and online support are playing an increasing role in the treatment landscape,” says Buckley.

“Chatbots, in particular, can be useful for people who either prefer not to, or feel unable to, use other forms of talking therapies like face-to-face counselling. Some people also value the convenience of an ‘always-on’ source of support available as and when it is needed.

“What is vital is that everyone who needs help to manage their mental health is offered a range of options of therapy – whether face-to-face or online – within a reasonable timeframe, ideally 28 days.”

Vempati also emphasises this accessibility to Verdict:

“Mainly, chatbots are making mental health support more accessible; the technology allows us to build this in a form that earlier interventions have not been able to before… giving the user access to an anonymous, robust, responsive and empathetic experience.

“Many, many users have told us Wysa has been there for them when there was nothing else. This is the biggest payoff for us as well.”

It is clear that mental health chatbots are not going to replace qualified psychologists any time soon. However, their ease of access can only be a positive for those suffering from mental health problems.

As more chatbots move to the forefront of discussions around mental health, their effects and ability to help patients will become more apparent. Ultimately, when it comes to mental health it never hurts to talk, whether it be with an AI or a human.
