Pro-Kremlin bots have shared dozens of falsified videos on social media showing Hollywood celebrities criticising Ukraine and its President Volodymyr Zelensky, according to news verification analysts.

The report by NewsGuard names Adam Sandler, Emma Stone and Vin Diesel as actors targeted by the videos, which have been doctored by Doppelganger, a Russian misinformation network named for its tactic of crafting counterfeit versions of reputable media outlets.

All the videos show real interviews but are dubbed in French or German with words the actors did not say.

One video shared on X on 16 April shows Adam Sandler in conversation with Brad Pitt, dubbed into French, saying: “Buying [Nazi propaganda minister Joseph] Goebbels’ real estate, and then that of Charles III, shows that Zelensky is a person without principles … it’s clear from his life, especially since he became president, that he cooperates with Nazis.”

It was reposted more than 660 times, even though the account that posted it had only ten followers and no previous activity.

In another video posted on the same day, Emma Stone appears to describe Ukrainians as “little pigs from the back end of Europe”, a common anti-Kyiv slur in Russia.

This epitomises how “Russian intelligence has always prioritised quantity over quality when it comes to the spread of misinformation”, according to Carolina Pinto, thematic analyst at GlobalData.

“The idea is that a user may not believe a piece of misinformation, but this may change if they are fed the same misinformation repeatedly,” Pinto tells Verdict.

“Eventually, a user’s online community will start interacting with the misinformation. All of a sudden, the misinformation is being spread not only by bots but also by their friends and family, making the misinformation all the more believable,” she adds.

A war of (mis)information

The Doppelganger network’s activity has intensified as Russia’s invasion of Ukraine continues into its third year. More than 500,000 people have been killed or wounded in the fighting.

While the frontline has been the Russia-Ukraine conflict’s main theatre, there has also been a simultaneous war of information.

Moscow has made continuous attempts to turn public opinion against Ukraine, with the aim of reducing military support for the country.

In December 2023, a BBC report found that thousands of fake TikTok accounts had garnered millions of views sharing dubious claims that senior Ukrainian officials had bought luxury villas or cars abroad following Russia’s invasion. The videos were a factor in the firing of Ukrainian Defence Minister Oleksiy Reznikov, according to his daughter Anastasiya Shteinhauz.

Propaganda and fake news stories have also attacked Ukraine’s right to exist, alleged that its military personnel have neo-Nazi ties, and accused Nato of building up military infrastructure in Ukraine to threaten Russia.

Such claims have been promoted by President Putin himself. In 2021, he infamously wrote a 5,000-word essay outlining Russia’s intellectual and sovereign claims over Ukraine, a stark foreshadowing of the invasion.

In February, Putin began his interview with US talk show host Tucker Carlson with a half-hour lecture on Russian statehood and Ukraine’s ‘artificial’ origins.

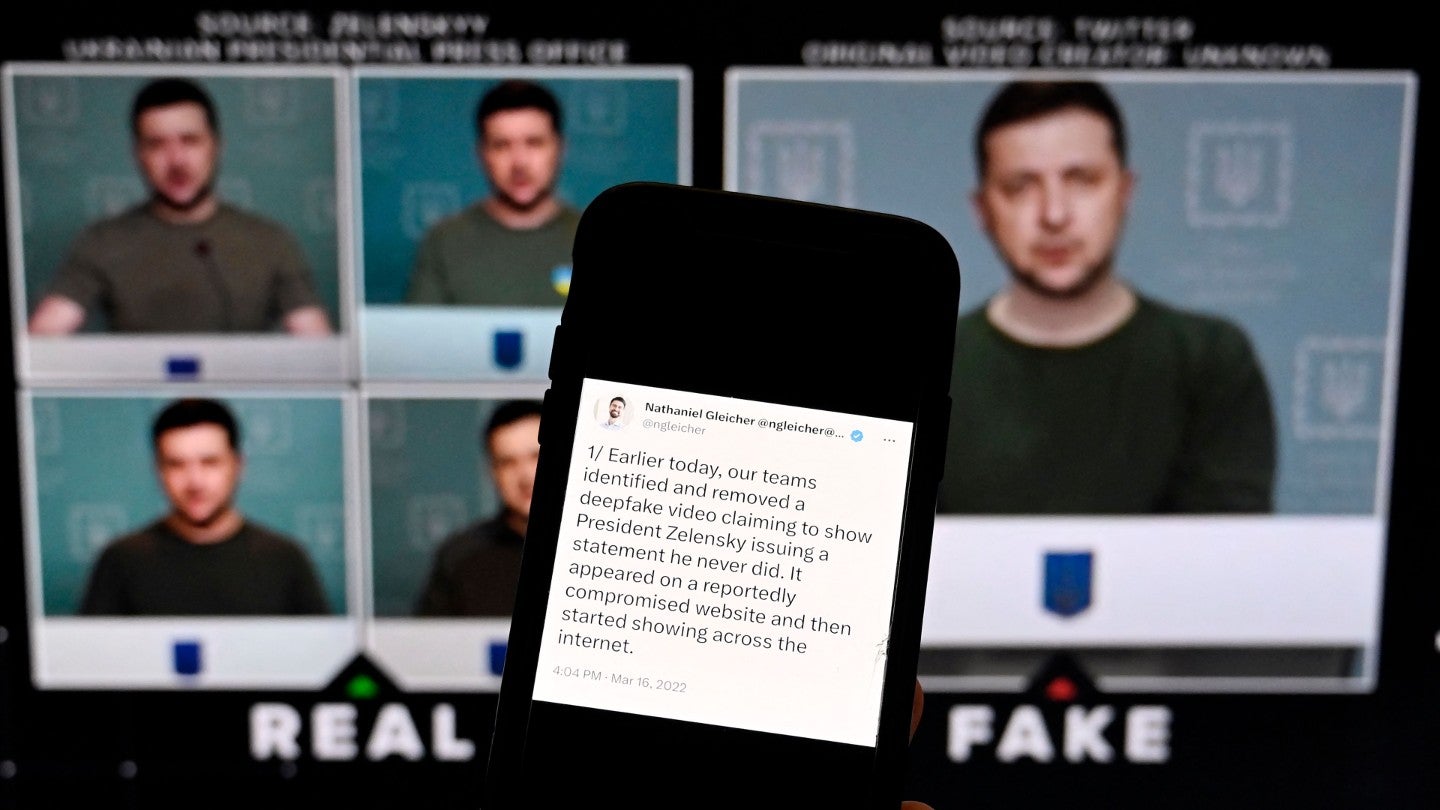

Led by Zelensky, Ukraine has attempted to counter the deluge of digital Russian propaganda.

The Ukrainian president has released near-daily updates on the state of the conflict across X (formerly Twitter), Facebook, Instagram and Telegram, appealing to the ‘social media age’ with widely shared selfie videos.

Initial concerns that Zelensky, a former TV comic, was an unsuitable wartime leader were assuaged by his ability to influence the information war on camera.

As the Russia-Ukraine war grinds on and technology develops, the quality of misinformation will improve, says Jordan Strzelecki, thematic analyst at GlobalData.

“Advances in text-to-video large-language models (LLMs), which are relatively basic compared to their text-to-image counterparts, will bring a new dimension to the misinformation war in the conflict,” Strzelecki tells Verdict. “Extremely realistic videos will be generated by actors from both sides, presenting added risks for escalation.”

AI-driven misinformation on the rise

Misinformation and LLMs, a primary conduit of generative AI, have risen hand in hand.

LLMs have an unprecedented capacity to mass-produce misleading digital content; the wide reach of Doppelganger’s fake videos and news is largely powered by AI bots.

Yesterday (30 April), the EU announced an investigation into Meta’s alleged failure to tackle misinformation and deceptive advertising, naming the Doppelganger network as a primary focus.

Concerns that social media platforms will be inundated with misinformation continue to grow as AI develops at an extraordinary rate.

“When Elon Musk spoke to Rishi Sunak last November, Musk said the power of AI is improving five- to ten-fold every year,” Strzelecki says. “This means in 12 months, ChatGPT and other LLMs could potentially be ten times as powerful as they are today. In two years’ time, they’ll be 100x as powerful. And in three years, 1000x as powerful. With this, misinformation generated with LLMs will only become more sophisticated and believable.”

In a year in which half of the world’s population will vote in elections, AI-disseminated misinformation is one of the most consequential global risks.

Governments have ramped up efforts to combat online misinformation, with MPs in the UK calling for a step-up in action last month.

Attention now turns to the efforts of governments and tech giants to curb state-backed misinformation networks like Doppelganger. Hanging in the balance is the digital theatre of a war that has claimed hundreds of thousands of lives.