Deepfakes are visual or audio content manipulated or generated using artificial intelligence to deceive the audience.

The technology can mimic a person’s voice or facial features using only a photograph or short audio recording. Fake content is commonly used on social media platforms for comedic purposes, but there is a darker side.

As the technology evolves, criminals are using it to conduct sophisticated cyberattacks on unsuspecting individuals.

The illusion of deepfakes

A common cybersecurity risk posed by deepfakes is fraud. Cybercriminals are adopting deepfake technology to stage elaborate, convincing scenarios that can then be used to conduct social engineering attacks.

For instance, a finance worker at a multinational company was deceived into paying $25m by an elaborate scam involving a video call in which everyone aside from the victim was a deepfake recreation.

The victim claimed they were suspicious initially, but their doubts were allayed after they saw realistic images of team members.

Individuals have also been tricked with deepfake audio over a phone call. This can lead to victims leaking sensitive information or even changing their behaviour. In early 2024, thousands of New Hampshire residents received a fake call that sounded like former President Biden, discouraging Democrats from voting in the state’s upcoming presidential primary.

Technology fooling technology

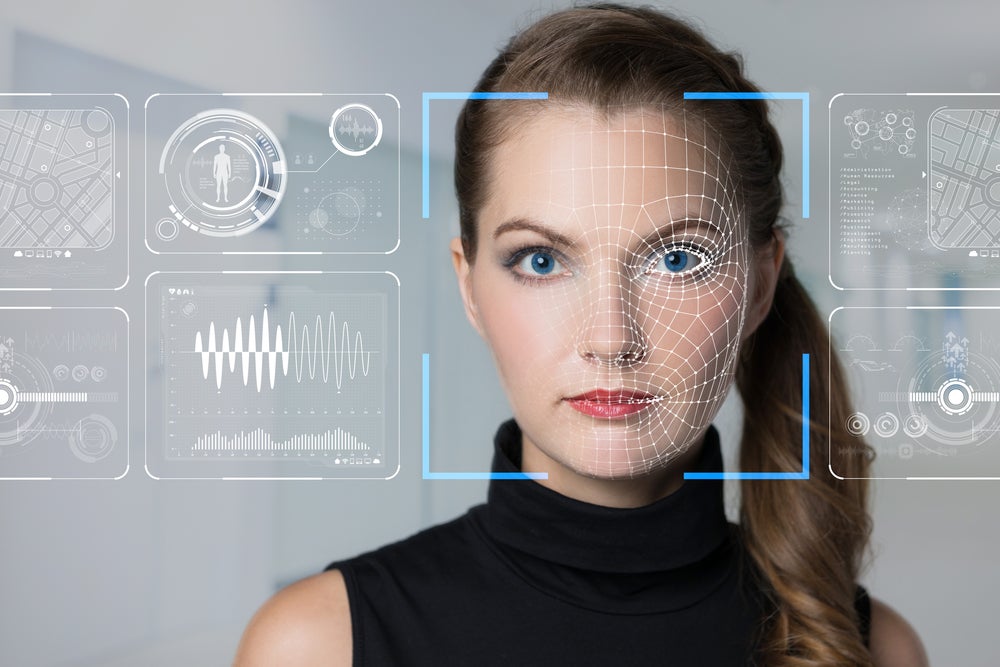

Deepfake technology also threatens biometric security. Individuals and organisations employ biometrics to keep their data safe. A common example is facial recognition to unlock a mobile device.

Sophisticated deepfakes can undermine biometric security measures. For example, high-quality deepfake videos can trick facial recognition systems into granting intruders access to systems. Similarly, audio-based security can be bypassed by deepfaked audio, which poses additional threats to voice verification models.

Identifying what is real and what is fake

Deepfake AI tools are widely available. The ease of access to these tools is concerning, as these types of attacks can be frequently replicated. Trusting what is seen and heard digitally becomes a constant worry for individuals and organisations. According to the UK government, 8 million deepfakes will be shared globally in 2025, up from 500,000 in 2023.

Countermeasures must be developed and employed to mitigate the risks posed by deepfakes. Organisations and governments are investing in robust solutions to reduce the number of deepfake-related crimes. AI is also used to detect inconsistencies in deepfake content, such as unnatural blinking patterns, image distortion, and irregular voice frequencies. Legislative efforts are also under way to regulate the use of deepfake tools, with new bills introduced to curb their malicious application.
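
To make the blinking example concrete, here is a minimal sketch of how such a check might work. It is not how any particular detection product operates. It assumes a separate, hypothetical face-tracking step has already produced one eye-openness score per video frame, and the threshold and "plausible" blink-rate range are illustrative assumptions rather than validated figures.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in per-frame eye-openness scores.

    `closed_below` is an assumed threshold: scores under it count as "eyes closed".
    """
    blinks = 0
    was_closed = False
    for score in eye_openness:
        is_closed = score < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts when the eyes go from open to closed
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(
    eye_openness: Sequence[float],
    fps: float,
    min_per_minute: float = 5.0,   # assumed lower bound, for illustration only
    max_per_minute: float = 40.0,  # assumed upper bound, for illustration only
    closed_below: float = 0.2,
) -> bool:
    """Flag a clip whose blink rate falls outside the assumed plausible range."""
    if fps <= 0 or len(eye_openness) == 0:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness, closed_below) / minutes
    return rate < min_per_minute or rate > max_per_minute


# One minute of video at 30 frames per second in which the eyes never close.
never_blinks = [0.3] * 1800
print(blink_rate_is_suspicious(never_blinks, fps=30))
```

A flag from a check like this would only be one signal among many. Real detection tools combine several cues, and a single heuristic is easy for an attacker to defeat.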

The US has enacted at least 50 bills regarding deepfake content creation and use, including making it a crime to unlawfully disseminate or sell images of another individual created by AI. Public awareness and education are other crucial factors that can minimise the impact of deepfakes, enabling individuals to identify and respond to an attack effectively.

Be wary, be cautious: The use of deepfakes is increasing

Seeing is believing—or so we thought. Sharing a video call or hearing a familiar voice became the gold standard of authenticity thanks to trusted digital communication methods.

But what if that smiling face or friendly voice was a carefully crafted illusion?

The use of deepfakes is increasing for both harmless and malicious applications. This poses clear cybersecurity risks, which demand prompt countermeasures such as implementing detection tools and creating legislative frameworks.

However, no security measure is 100% effective. All individuals must be vigilant to avoid falling victim to these types of cyberattacks.

The phrase ‘seeing is believing’ is attributed to the 17th-century English clergyman Thomas Fuller. The full quote was actually “seeing is believing, but feeling is the truth.” In other words, if you see it, but it still doesn’t feel right, don’t believe it. Trust your instinct.