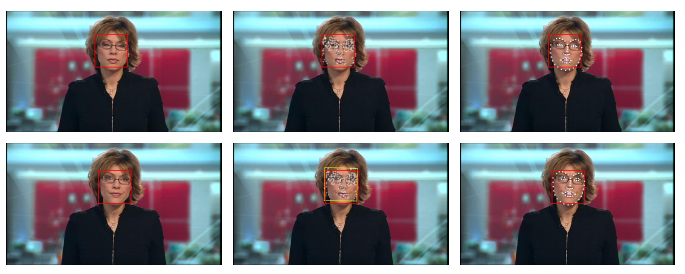

Oxford University scientists have created a new software programme that can lip-read better than humans.

The new artificial intelligence (AI) software, named Watch, Attend and Spell (WAS), has been developed by researchers at the university in collaboration with Google’s DeepMind.

The team, which included Professor Andrew Zisserman and Joon Son Chung at Oxford and Andrew Senior and Oriol Vinyals at DeepMind, wanted to create a machine that has the potential to lip-read more accurately than people to help those with hearing loss.

This led to the creation of WAS, which uses computer vision and machine learning to learn how to lip-read. It was trained on a dataset made up of more than 5,000 hours of TV footage, from six programmes including BBC Breakfast, Newsnight and Question Time. The videos contained more than 118,000 sentences in total and a vocabulary of 17,500 words.

WAS was then put into action, and the team compared the ability of the machine and a human expert to register what was being said in a silent video. The software recognised 50 percent of the words in the dataset without error, compared with only 12 percent for the human expert.

Even more remarkably, the mistakes that the machine did make were only minor, such as a missing “s” at the end of a word or a single-letter misspelling.

Joon Son Chung, lead author of the study, said:

“Lip-reading is an impressive and challenging skill, so WAS can hopefully offer support to this task – for example, suggesting hypotheses for professional lip readers to verify using their expertise.

“There are also a host of other applications, such as dictating instructions to a phone in a noisy environment, dubbing archival silent films, resolving multi-talker simultaneous speech and improving the performance of automated speech recognition in general.”

Chris Bowden, head of technology development at the National Deaf Children’s Society, told Verdict:

“New technologies like this could have a profound effect on the lives of deaf young people in the future. Innovation in AI lip-reading technology has the potential to greatly enhance speech-to-text technologies. This could help improve speed and accuracy and also reduce the costs of subtitling in TV, film or even communications in the classroom or in noisy places.

“Developments like this could make a real difference to the lives of the 45,000 deaf children in the UK in many ways and we look forward to seeing how it develops in the future.”

The impact of AI in the healthcare space can be seen in many different ways. IBM Watson is being used to help diagnose and treat oncology patients, AI apps like Babylon are being used to support GPs, and the technology is empowering drug development.