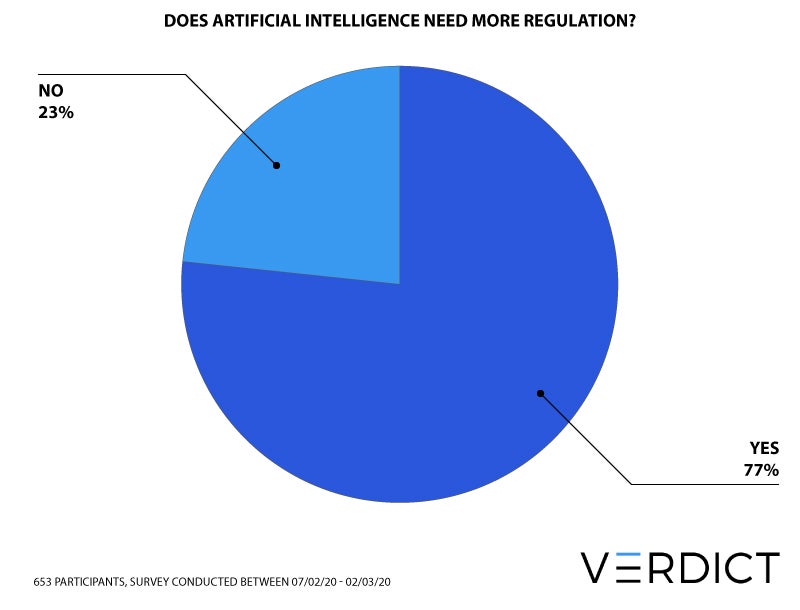

Artificial intelligence (AI) does not currently have enough regulation, according to the overwhelming majority of Verdict readers, many of whom work in the technology industry.

In a poll conducted across the Verdict website between 7 February 2020 and 2 March 2020, 77% of the 653 respondents said that they felt AI needed more regulation, a total of 502 people.

Meanwhile, just 23% (151) said that AI did not need more regulation.

Why AI regulation support is rising

The results come as AI faces growing concerns about its applications and use, which have led to increased calls for regulation.

While, as with many nascent technologies, AI has been left to develop without significant regulation so as to avoid innovation being stifled, potential issues and concerns around its misuse have become increasingly prevalent.

In particular, there have been fears around bias, especially in machine learning and facial recognition applications.

Machine learning has been applied to a host of uses, but bias resulting from insufficiently broad training data has hampered its effective application in some areas.

For example, projects exploring the use of AI in recruitment have been found to unwittingly discriminate against women and people of colour, while a widely used healthcare algorithm in the US was found to be biased against black people.

Meanwhile, despite its increasing use in real-world settings, such as by police forces, facial recognition has also been found to be biased against people of colour.

There have also been concerns that businesses are not giving ethics enough consideration, with PwC research finding that just one in four prioritise ethics when they deploy AI.

EU considers tighter AI regulation

The industry is making growing attempts to fix the problem, with calls coming from organisations such as the Confederation of British Industry (CBI), and projects to assist with the issue released by organisations such as IBM.

However, as AI becomes more widespread and concerns mount about companies’ transparency over their use of the technology, regulation now looks increasingly likely.

The EU is currently considering an outright ban on the real-world use of facial recognition technology until it reaches a greater level of maturity, while a report published in February by the European Commission proposes tighter AI regulation.

But while there may be public support for regulation, the report walks a narrow line between regulating effectively and hampering companies’ ability to compete with other leaders in the AI space.

“Some may say this plan to build a trusted ecosystem for AI is long overdue, but you can see from the paper perhaps why this has taken so long. There is a huge mesh of legislation that impacts – and could impact – AI, and a broad set of regulators that admit they haven’t got the right skills in-house to regulate the use of AI. There are also some legislative holes that need filling,” said Georgina Kon, TMT partner at law firm Linklaters, when the report was published.

“Even so, if the EU is able to achieve its vision of engaging with industry in high-risk sectors where both the potential risk and rewards are huge to build the right legislative and voluntary regulation for AI, it will have given itself a huge competitive edge in the digital economy.”

AI regulation may have support, but the challenge of making it a reality remains.