The increasing ease with which artificial intelligence (AI) can be used to scrape data from across the internet is driving growing scrutiny of the technology’s impact on privacy, a new report outlines.

GlobalData’s Data Privacy report states that the widespread adoption of generative AI across industries has increased the relevance of data privacy. It notes that regulators globally have proposed privacy-focused policies and legislation to manage the risks associated with the emerging technology.

“Generative AI involves using machine learning algorithms to create new content, such as images, music and text,” it says. “Some of the best-known examples of generative AI are OpenAI’s ChatGPT and Google’s Gemini. Generative AI algorithms require large amounts of data. This data is scraped from various locations across the internet, potentially resulting in data from individuals or organisations being used without consent.”

The report explains that concerns surrounding data privacy, in particular in relation to AI, have prompted regulators globally to act. It also acknowledges that, despite such efforts, “rogue AI could make privacy legislation difficult to enforce.”

AI privacy regulation

Nonetheless, the report cites the EU’s AI Act in particular as a “landmark regulatory framework” that addresses privacy concerns by categorising AI systems based on risk levels and imposing strict requirements for high-risk applications. It adds that the EU’s General Data Protection Regulation (GDPR) is perhaps the best-known privacy regulation globally and has driven a shift towards improved protections.

Of the AI Act, another new and complementary GlobalData report, The Global AI Regulatory Landscape, says: “The EU is the global leader in AI regulation. Its first-mover advantage means it can set the agenda and define the terms of the discussion for all nations. The next few years will be decisive for the EU’s enforcement of its AI Act, which could demonstrate to the world that a risk-based approach targeting general-purpose AI models can work.”

That report also notes that “there is no evidence that a higher level of regulation is detrimental to innovation” and, indeed, that “legal certainty is paramount for companies that need to make investment decisions on AI”.

AI and privacy in the US

However, speaking at the AI Action Summit in Paris on 11 February, US Vice President JD Vance said on behalf of the new Trump-led government in the US: “We believe that excessive regulation of the AI sector could kill a transformative industry just as it’s taking off and will make every effort to encourage pro-growth AI policies.”

While the Data Privacy report acknowledges that greater scrutiny of privacy practices requires increased action and investment from businesses, it adds that failure to meet standards “can result in severe financial and reputational damage.” It notes too that the lack of a federal data privacy law in the US will raise costs for businesses as they will ultimately have to comply with multiple different sets of state-level regulations.

Vance added of the EU’s legislation for preventing illegal and harmful activities online and the spread of disinformation: “Many of our most productive tech companies are forced to deal with the EU’s Digital Services Act and the massive regulations it created about taking down content and policing so-called misinformation.”

Vance’s comments should be taken within the context that the new US administration is taking an ‘America First’ protectionist approach to global relations and is a frequent source of misinformation. Like its Digital Services Act, the EU’s AI Act, which came into force in August 2024 and will be fully applicable by 2026, will be relevant for US companies working with or within the bloc.

In contrast, an executive order signed by former President Joe Biden in 2023 in an attempt to give the US a unified national strategy was repealed by Donald Trump on the first day of his second term in office in January this year. A subsequent executive order signed by Trump two days later titled Removing Barriers to American Leadership in Artificial Intelligence is described by the report as “largely aspirational national policy on AI, the specific details of which are far from clear.”

AI and privacy globally

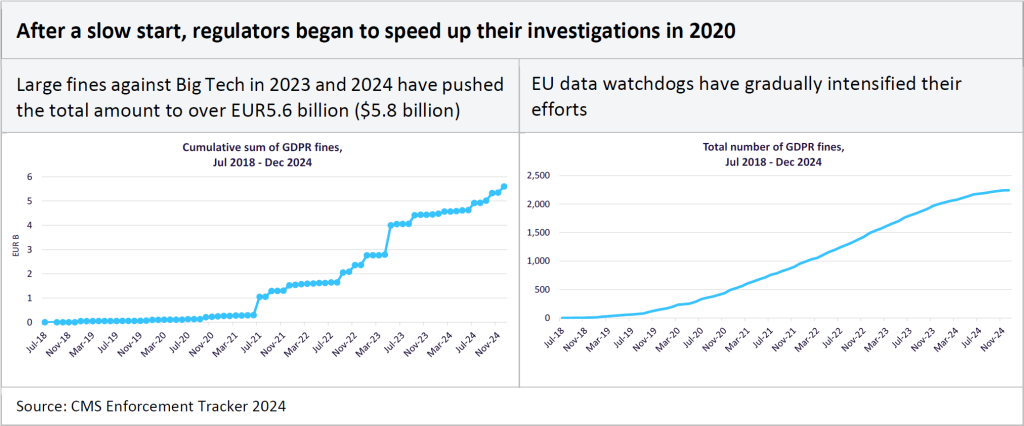

The Data Privacy report notes that the enforcement of data privacy regulations has intensified globally, with fines for non-compliance reaching unprecedented levels, and it counts Brazil, Canada, China, India and indeed the US as being among the countries that have introduced or strengthened regulation in this area.

It adds of the US, however: “The delayed introduction of a federal privacy law created a patchwork of state regulations, raising business costs. The introduction of the American Data Privacy and Protection Act in June 2022 aimed to standardise how companies handle personal data, limiting its collection, processing and transfer to essential purposes. However, the bill failed to advance to the House or Senate due to a lack of support. This has left businesses navigating varied local laws, complicating compliance and international data transfers.”

The report asserts that countries’ differing approaches to regulating AI, “with some prioritising data protection while others prioritise fostering innovation,” will impact the strength of data privacy standards globally, requiring businesses to “navigate complex and inconsistent compliance requirements and adapt to differing regulatory landscapes.”