A fear of what will ultimately destroy humanity grips the world with an iron fist, especially when the thing expected to deal the final blow is unknown, unfamiliar, and unmanageable: AI.

The ways in which AI is supposed to destroy us are manifold. There has been talk of AI rearranging the biosphere and reconfiguring atoms in a manner incompatible with human life, with the technology in this scenario eventually ridding itself of its most vicious pest: people. AI's growing ability to act in the world is an equally fearsome prospect, with the likes of GPT-4 becoming smart enough to get past CAPTCHAs, hoodwinking computers into thinking it a mere mortal.

Feminizing technology

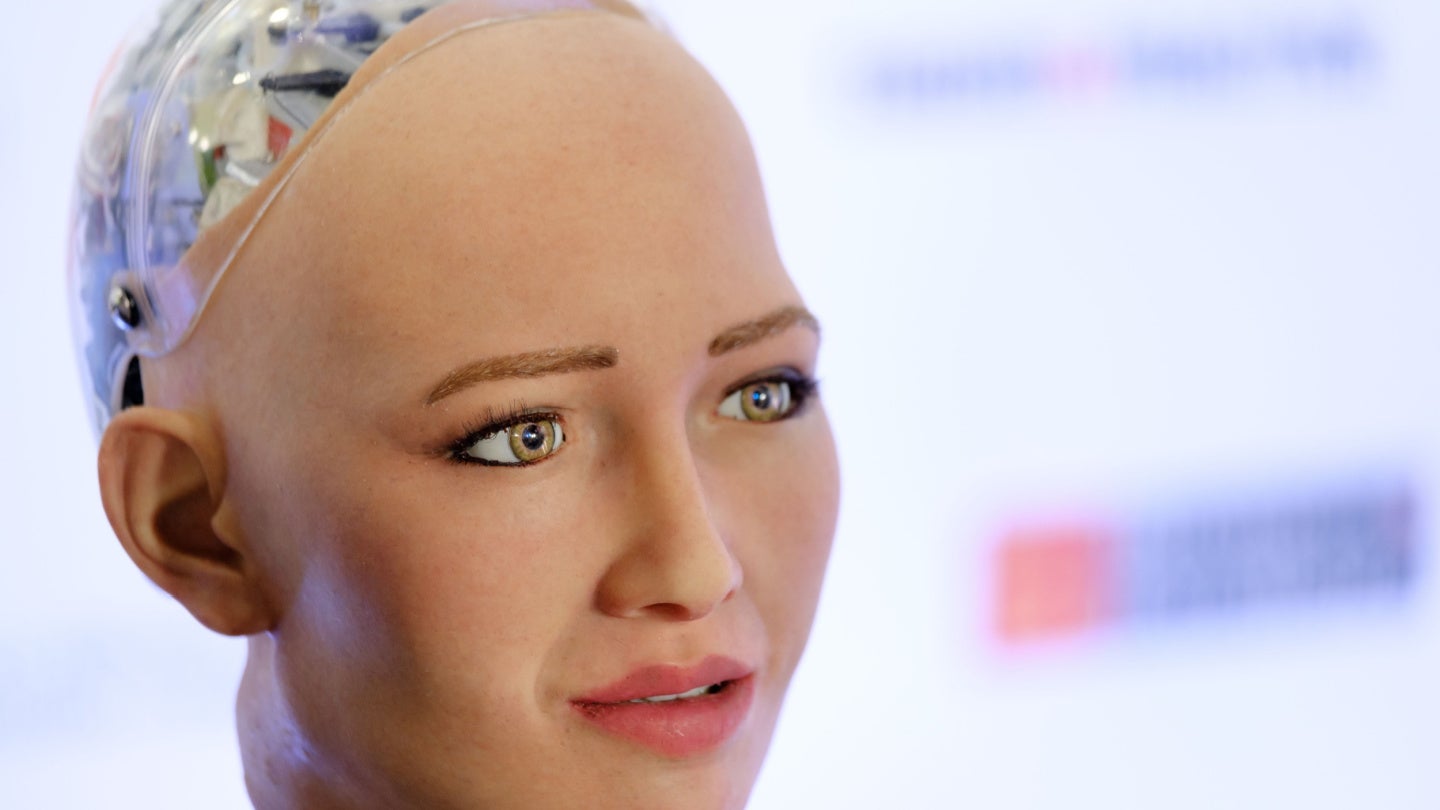

Tech companies have long racked their brains and scratched their heads over how they can possibly make their artificial intelligence palatable to the public. How could they make AI something that consumers would want to welcome into their homes? The answer has played out even across early versions of AI. The dulcet tones of Amazon’s Alexa have echoed through households for years, while Microsoft’s Cortana has an equally sugary voice. Google Assistant is voiced by Kiki Baessell.

It would seem the only way to keep AI and robots from provoking fear is to feminize them. Femininity helps people feel less afraid of AI because women are, supposedly, considered more emotionally intelligent, comforting, caring, and warm. Essentially, women are seen as non-threatening.

Karl MacDorman, an expert in robotics and human-computer interaction at Indiana University, ran an experiment on feelings towards typically female voices versus typically male voices. He found a discrepancy between what people report on questionnaires and what they feel: women like a female-sounding voice more than they admit, while men claim to prefer a female-sounding voice when in fact they have no real preference. So, on this evidence, using a female voice for robots and AI is ultimately preferable, or at least acceptable, to all.

AI and the femme fatale

Yet making AI and bots female does not strip away the reality of their dangers. The attribution of femininity to bots echoes the mental load carried by women: the likes of Alexa and Cortana are often advertised as reminding us of birthdays, dinners, and appointments, and are shown explaining cooking techniques and organizing and ordering groceries.

This sentiment gives way to a suspicion of women that dates back thousands of years, and which has been wrapped up in the idea of the ‘femme fatale’. Long before Scarlett Johansson voiced the AI ‘Samantha’ in Her, and Rita Hayworth played the eponymous Gilda, old English tales brought the enchantress Morgan le Fay to life in Arthurian legend. The femme fatale uses beauty, charm, and deceit to lure men into danger or even death, which resonates with the temptations of AI.

The fact remains that whatever attempts are made to neutralize the perceived threat of AI, it lingers nonetheless. AI is being deployed in invisible and obscure ways, and the risks it creates are gathering pace. These harms play out daily: in the provision of welfare benefits, for example, some governments use algorithms to root out fraud. The consequence is a ‘suspicion machine’ that creates biases. These biases usually fall on marginalized people, leading to discriminatory outcomes. Ultimately, the feminization of AI, in a bid to depict it as something safe, may be dangerous in more ways than one: not only could it exacerbate feminine stereotypes, but it could also subconsciously create in the minds of consumers a connection with the femme fatale: seductive and alluring, yet decidedly dangerous.