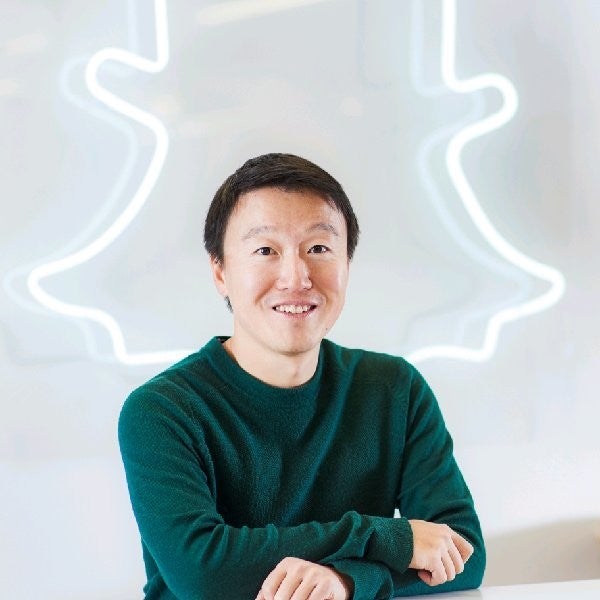

Snap Inc’s head of computer vision engineering, Qi Pan, has the kind of resume that Big Tech companies dream of. Pan holds a first-class degree from the University of Cambridge in the UK and a PhD in computer vision engineering, and has since worked with billion-dollar brands including Qualcomm.

Pan was introduced to vision engineering as an undergraduate and became fascinated by the concept, particularly in relation to machine learning and AI. But on a more fundamental level, Pan says that, of all the senses, “if I were to lose one, I would miss vision the most”.

For the layperson, computer vision is widely understood to be a form of digital photography, essentially groups of coloured dots that represent the world. “But the computer doesn’t actually understand anything in that image,” says Pan, who explains that “computer vision has you take that picture, and then has the computer understand the objects that it’s going to pick out. But that’s only the first stage.”

Eventually the user wants to understand what is happening in the world in real-time to enable interaction with the image. “There’s a difference between processing a single image at a time, waiting for it to process and getting the result, which actually is a lot more of what we see today,” says Pan.

Sending an image to the cloud for processing may take a few seconds. Work on real-time computer vision, which runs at around 30 frames per second, was limited when Pan was starting out. “It wasn’t yet possible to look around the world on a phone or on glasses, or robotic self-driving cars,” says Pan.
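To put the gap between the two approaches into numbers, the short Python sketch below works out how much time a system has to process each frame at 30 frames per second, and how many frames go by during a cloud round trip. The two-second latency is an assumed stand-in for the "few seconds" mentioned above, and the function names are illustrative only.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def frames_elapsed(latency_s: float, fps: float) -> int:
    """Whole frames the camera delivers while one request is in flight."""
    return int(latency_s * fps)


# 30 frames per second is the rate cited in the article.
budget = frame_budget_ms(30)        # about 33.3 ms per frame

# Assumption: a 2-second round trip stands in for "a few seconds".
missed = frames_elapsed(2.0, 30)    # 60 frames pass before a result returns
```

In other words, real-time processing has to finish in roughly a thirtieth of a second, which is why on-device hardware matters as much as the algorithms.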

A combination of hardware development and the ability to run computer vision in the cloud has enabled much wider adoption of the technology, with real-time access to algorithms and APIs making it both scalable and affordable. “Processors and graphics cards suddenly became so much more powerful,” says Pan, who notes, however, that the deep learning and neural network algorithms invented in the 1970s have not changed all that much.

Apple brought a light detection and ranging (LIDAR) sensor to the iPhone when it launched the iPhone 12 Pro in 2020. LIDAR technology essentially measures the time taken for light to reach an object and bounce back, and in this way can build a three-dimensional sense of objects and their surroundings. It is the technology which has, in part, driven the development of autonomous vehicles. The technology can create “amazing transformation effects” like the ones that users have available on Snap’s Snapchat platform today.
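The timing principle behind LIDAR can be sketched in a few lines of Python. This is a simplified illustration of time-of-flight ranging in general, not a description of any particular sensor: the light travels to the object and back, so the distance is half the round trip multiplied by the speed of light. The 20-nanosecond reading is a made-up example value.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # metres per second, in a vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to an object given the out-and-back travel time of a light pulse."""
    # Divide by two because the pulse covers the distance twice.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical reading: the pulse returns after 20 nanoseconds.
d = distance_from_round_trip(20e-9)   # roughly 3 metres
```

Repeating this measurement across many points in a scene is what yields a depth map of objects and their surroundings.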

But Snap sought to democratise this feature, says Pan. “We spent time using machine learning to bring this to regular devices without LIDAR.” That is where the company applies machine learning-based computer vision, which uses regular images to understand the surfaces of objects and their relation to one another.

Computer vision in the spotlight

Pan joined Snap in 2016 and founded Snap’s computer vision engineering function in London, gradually expanding the team over time. These were the early days of what would become Snap’s lenses, or filters, with augmented reality functionality.

Pan’s objective of making the camera smarter, so that it can overlay the real world with digital content, is now well underway. In an age of content and information overload with increasingly frictionless access to the internet, Snap’s focus is now on the last point of access between the user and the internet. And the use cases for making access to information more frictionless are endless.

To illustrate its utility, Pan explains that instead of using a map app on a smartphone, which requires the user to carry and refer to a screen, Snap’s prototype smart glasses automatically overlay mapping onto the user’s real-life view, complete with digital navigation arrows within the field of view.

Other use cases include product manuals: Snap’s glasses could identify a button in the real world and overlay instructions on it in the wearer’s view. In this way, augmented reality (AR) could make such processes unrecognisable in future.

However, wearable technology is still in its infancy. Apple’s Vision Pro, for example, has a price point that is proving a barrier to widespread AR adoption in the short term. But by developing Snap’s glasses, the company is making a “kind of long term play”, says Pan.

On the prohibitive consumer price point and technology teething problems, Pan notes: “We think the way to get there is through iteration, which is why we released like the glasses.” And Snap is doing so by putting the glasses in the hands of developers so that they may build, test and refine what will become the next generation of wearable vision technology. “We want to take these iterative steps to make sure we get to the right point,” he adds.

AR presents huge market opportunity

According to GlobalData forecasts, AR will become a $100bn market by 2030, up from $22bn in 2022. This growth will be largely driven by AR software, with limited spending on AR headsets and AR smart glasses.
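For a sense of scale, the two figures above imply a compound annual growth rate that can be worked out directly. The calculation below is derived only from the $22bn and $100bn figures cited here; it is not a rate published in the forecast itself, and the function name is illustrative.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate needed to get from start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# $22bn in 2022 growing to $100bn in 2030 is eight years of compounding.
rate = implied_annual_growth(22.0, 100.0, 2030 - 2022)   # roughly 0.21, about 21% a year
```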

While smartphones are currently the primary device for AR applications, Snap’s Big Tech competitors are developing their own smart glasses, as well as smartphones with AR capabilities such as advanced spatial awareness, precise positional tracking, and AI tools. In July, the FT reported that Meta was exploring a stake in Ray-Ban maker EssilorLuxottica as it builds its wearable technology.

The kind of AR that Snap is developing has utility for both consumers and enterprises, according to GlobalData’s Thematic Intelligence 2024 Augmented Reality report. On the consumer front, the report credits consumer-targeted developments such as Pokémon Go, Snapchat Lenses, and TikTok Effects with popularising AR as a tool for entertainment and ecommerce.

The adoption of AR by enterprises is also advancing in sectors such as retail, healthcare, and manufacturing, mainly for user experience, training, and remote collaboration. “Businesses will continue to adopt AR in the coming years, but the high cost of headsets and smart glasses will hinder widespread adoption in the short term. Meanwhile, mobile AR and WebAR will increasingly gain prominence in advertising,” according to the report.

Google Glass, Alphabet’s wearable AR product launched in 2014, failed to make an impact. Snap’s prototype glasses are lightweight, and Pan’s goal is to make them as small and as cheap as possible, with the widest field of view and longest battery life possible. “Obviously, some of those things are trade-offs,” he acknowledges. On avoiding the spectacular implosion of the first-to-market Google Glass, Pan says this is where “the developer cycle and iteration really helps”.

Though Snap’s smart glasses are still in development, the company has over 300 million users of its AR technology on mobile devices, primarily smartphones. Today’s AR is delivered through the selfie camera on a smartphone. “With the glasses, once they’re popular enough, it’s like turning the camera and pointing it in the opposite direction,” says Pan, who notes that the AR experience is already present on Snap’s Snapchat platform.

Snap’s free tool, Lens Studio, means consumers and businesses both large and small do not have to be computer vision experts or AR experts. Snap currently has 350,000 lens creators, who have built three and a half million lenses. And these have been viewed trillions of times.

Single developers, branding agencies and corporates can build Snap Lenses to better market their brands to consumers. Retail, in particular, is driving the implementation of AR and has seen widespread adoption for use cases such as trying on watches, clothes and accessories. From a marketing perspective, this is an ideal way to capture the attention of Gen Z consumers, who expect to interact with brands in this way, according to Pan.

A new function of computer vision engineering called ray tracing is helping luxury brands like Cartier to convey the quality of their products. The technology can reproduce lighting conditions to show the sparkle of a ring in a physical simulation, mimicking the way light interacts with objects in the real world.

“You take a light source, you bounce a virtual ray in the computer and try to figure out how light moves to give you a much more realistic look to things like handbags, rings and watches,” explains Pan.
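The idea Pan describes can be illustrated with a minimal Python sketch of a single ray bounce. This is a textbook simplification and not Snap’s renderer: it casts one ray at a sphere (standing in for a polished surface), finds where the ray hits, and computes the mirror-reflected direction. All names and scene values are illustrative assumptions.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit on the sphere, or None."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c          # quadratic discriminant (a == 1 for a unit ray)
    if disc < 0:
        return None                  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None      # ignore hits behind the ray origin


def reflect(direction: Vec, normal: Vec) -> Vec:
    """Mirror reflection of a direction about a unit surface normal."""
    return sub(direction, scale(normal, 2.0 * dot(direction, normal)))


# Hypothetical scene: a ray fired straight ahead at a sphere five units away.
origin, direction = (0.0, 0.0, 0.0), (0.0, 0.0, -1.0)
center, radius = (0.0, 0.0, -5.0), 1.0

t = hit_sphere(origin, direction, center, radius)       # distance to the surface
hit_point = sub(origin, scale(direction, -t))            # origin + t * direction
normal = normalize(sub(hit_point, center))               # surface normal at the hit
bounced = reflect(direction, normal)                     # direction after the bounce
```

A full renderer repeats this for many rays per pixel and follows each bounce towards the light sources, which is what produces realistic highlights and sparkle.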

As the technology advances, so too does the creative licence of users. New ways to approach marketing, branding, learning and development, enterprise communications and designing the built environment will emerge. And throughout, Pan acknowledges the importance of ‘fun’ in the mix. By using Snapchat, a traveller can take photos of the Eiffel Tower and make it dance, or see Big Ben without the scaffolding that covered it for four years during repairs. Above all, they can use Snap’s creative tools to suspend the laws of physics and reinvent reality through computer vision.